Extending Lubelogger

I recently found a neat little self-hosted project called LubeLogger, which is sorta like a vehicle management tool. It does some neat things like recording my fuel usage (which I've been doing for years in a spreadsheet), tracking maintenance and repairs, and scheduling reminders based on mileage and/or time.

The only thing it didn't do out of the box was handle tire wear. I have summer and winter tires for my vehicles, and I like keeping track of how much distance they've travelled.

This was raised as a feature request in the GitHub repo (https://github.com/hargata/lubelog/issues/382), and was "solved" by adding tags to the odometer entries in the app. This is fine, but my "workflow" doesn't have me journaling odometer entries - instead, I am capturing fuel fill-ups, services and repairs.

What am I doing..

In my ongoing effort to write shit down so I don't forget how I wired up bits..

I wanted a way to automate the tagging of the odometer entries, or at least remind me to make some corrections (this is probably where I start..)

LubeLogger runs as a container in my Kubernetes cluster. Storage is in Ceph, and the database is in a common CNPG Postgres cluster. Updates are handled by a nightly run of Renovate, which is cool, because it'll handle container image updates just the same as it will Python library updates.

I'm planning to write this fix in Python and run it as a container image that will be called at some frequency.
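To make the goal a bit more concrete, here's a rough sketch of the calculation I have in mind: given odometer readings that each carry a tire tag, attribute the distance between consecutive readings to the tag on the later reading. The `OdometerEntry` shape and the tag names are made up for illustration and are not LubeLogger's actual schema.

```python
from typing import NamedTuple


class OdometerEntry(NamedTuple):
    """Hypothetical record shape; not LubeLogger's real data model."""
    odometer: int  # odometer reading, in whatever unit the vehicle uses
    tag: str       # tire set mounted at the time, e.g. "summer" or "winter"


def distance_per_tag(entries: list[OdometerEntry]) -> dict[str, int]:
    """Sum the distance between consecutive readings, credited to the later reading's tag."""
    totals: dict[str, int] = {}
    ordered = sorted(entries, key=lambda entry: entry.odometer)
    for previous, current in zip(ordered, ordered[1:]):
        delta = current.odometer - previous.odometer
        totals[current.tag] = totals.get(current.tag, 0) + delta
    return totals


if __name__ == "__main__":
    sample = [
        OdometerEntry(10_000, "summer"),
        OdometerEntry(14_500, "summer"),
        OdometerEntry(16_000, "winter"),
        OdometerEntry(21_000, "winter"),
        OdometerEntry(22_200, "summer"),
    ]
    print(distance_per_tag(sample))  # {'summer': 5700, 'winter': 6500}
```

Crediting the whole gap to the later reading is a simplification: a tire swap that happens between two entries gets attributed entirely to the new set.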

Getting Started

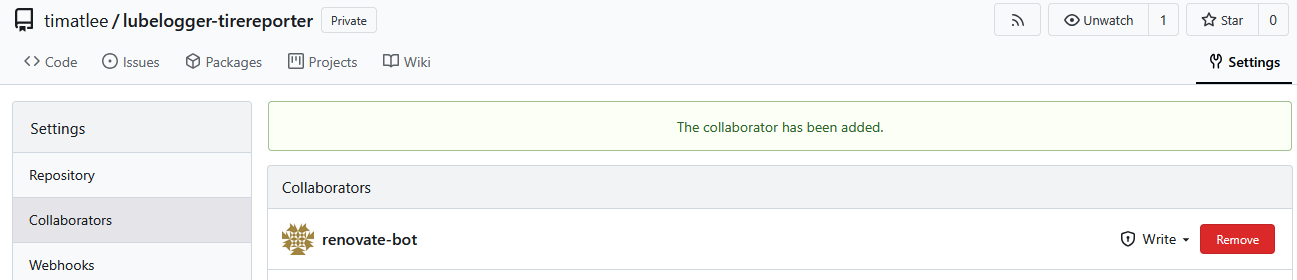

All this stuff is stored in an on-prem instance of Gitea. So, first I'll create a repo for this project, and share it with the renovate-bot user:

Next, scaffold out the Python application and Docker bits:

- Create a virtual environment for all this (this lands in `.venv`). See the Python docs for this, but VS Code makes this trivial.
- Create an empty `requirements.txt` file. We'll use this in a moment with `Dockerfile`.
- Create the application folder and its `__main__.py` file:

```python
import logging
from os import environ

if __name__ == "__main__":
    print("Hello from __main__")
```

- Create `Dockerfile` (adjust as you need):

```dockerfile
FROM python:slim

WORKDIR /app

ENTRYPOINT ["python3", "-m", "lubelogger-tirereporter"]
CMD []

# Install requirements in a separate step so that code-only changes don't reinstall them.
COPY requirements.txt ./
RUN pip install -r requirements.txt

COPY . .
```

- Set up a build task in VS Code to make your container image. This landed in `.vscode/tasks.json`:

```json
{
    "version": "2.0.0",
    "tasks": [
        {
            "label": "Build Docker Image",
            "type": "shell",
            "command": "docker build -t lubelogger-tirereporter .",
            "problemMatcher": []
        }
    ]
}
```

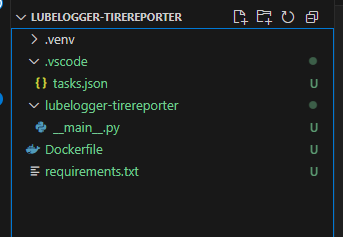
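The `logging` and `environ` imports in `__main__.py` aren't doing anything yet. Here's a minimal sketch of where they might end up, assuming the container gets its settings from environment variables. The variable names `LUBELOGGER_URL` and `LOG_LEVEL` are placeholders I've made up, not anything LubeLogger defines.

```python
import logging
from collections.abc import Mapping
from os import environ


def load_config(env: Mapping[str, str] = environ) -> dict[str, str]:
    """Read settings from environment variables (names are hypothetical)."""
    base_url = env.get("LUBELOGGER_URL")
    if not base_url:
        raise RuntimeError("LUBELOGGER_URL must be set")
    return {
        "base_url": base_url.rstrip("/"),
        "log_level": env.get("LOG_LEVEL", "INFO").upper(),
    }


if __name__ == "__main__":
    # A literal mapping stands in for the real environment so this runs anywhere.
    config = load_config({"LUBELOGGER_URL": "https://lubelogger.example.com/"})
    logging.basicConfig(level=config["log_level"])
    logging.getLogger(__name__).info("Using LubeLogger at %s", config["base_url"])
```

Passing the environment in as a parameter keeps the function easy to test without touching the real process environment.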

The file structure winds up looking like the following:

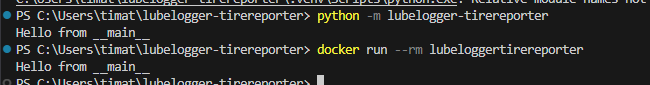

Test that your environment is somewhat healthy:

- Make sure the Docker image can be built (either use the VS Code task, or run something like `docker build -t lubelogger-tirereporter .` from the project directory)
- Running the image gives some sort of output (try with `docker run --rm lubelogger-tirereporter`, which should output "Hello from \_\_main\_\_")
- Running the Python script directly gives you the same output
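If you'd rather script those checks than eyeball them, something like this sketch would do. It runs a command and compares its output to the expected greeting. The helper name `prints_expected` is mine, and the demo uses a stand-in command so it runs without Docker.

```python
import subprocess
import sys

EXPECTED = "Hello from __main__"


def prints_expected(command: list[str], expected: str = EXPECTED) -> bool:
    """Run a command and report whether it exits cleanly and prints exactly the expected line."""
    result = subprocess.run(command, capture_output=True, text=True, check=False)
    return result.returncode == 0 and result.stdout.strip() == expected


if __name__ == "__main__":
    # Stand-in command so the sketch runs anywhere.
    stand_in = [sys.executable, "-c", f"print({EXPECTED!r})"]
    print(prints_expected(stand_in))
    # The real checks, run from the project directory, would be:
    #   prints_expected([sys.executable, "-m", "lubelogger-tirereporter"])
    #   prints_expected(["docker", "run", "--rm", "lubelogger-tirereporter"])
```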

Automating Container Builds

This is where I start to get a bit hazy :P. My objective here is that whenever I push to Gitea, the Gitea runner builds the image and uploads it to Gitea's own package registry (since Gitea can act as a container registry).

This depends on setting up action runners in Gitea (https://docs.gitea.com/usage/actions/act-runner), which I already have set up. If you're using GitHub, you have this available to you already with some minor changes.

Create the .gitea/workflows directory, and a build.yaml file inside it:

```yaml
name: Build and Push Docker Image to Gitea Packages

on:
  push:
    branches:
      - main
  pull_request:
    branches:
      - main

jobs:
  build:
    runs-on: ubuntu-latest
    container:
      image: catthehacker/ubuntu:act-latest
    steps:
      # Checkout the repository
      - name: Checkout code
        uses: actions/checkout@v4

      # Set up Docker
      - name: Set up Docker
        uses: docker/setup-buildx-action@v3

      # Log in to Gitea Docker Registry
      - name: Log in to Gitea Docker Registry
        uses: docker/login-action@v3
        with:
          registry: git.timatlee.com
          username: ${{ secrets.REGISTRY_USERNAME }}
          password: ${{ secrets.REGISTRY_TOKEN }}

      # Build and push the image
      - name: Build and Push image
        uses: docker/build-push-action@v6
        with:
          context: .
          push: true
          tags: git.timatlee.com/timatlee/lubelogger-tirereporter:latest
```

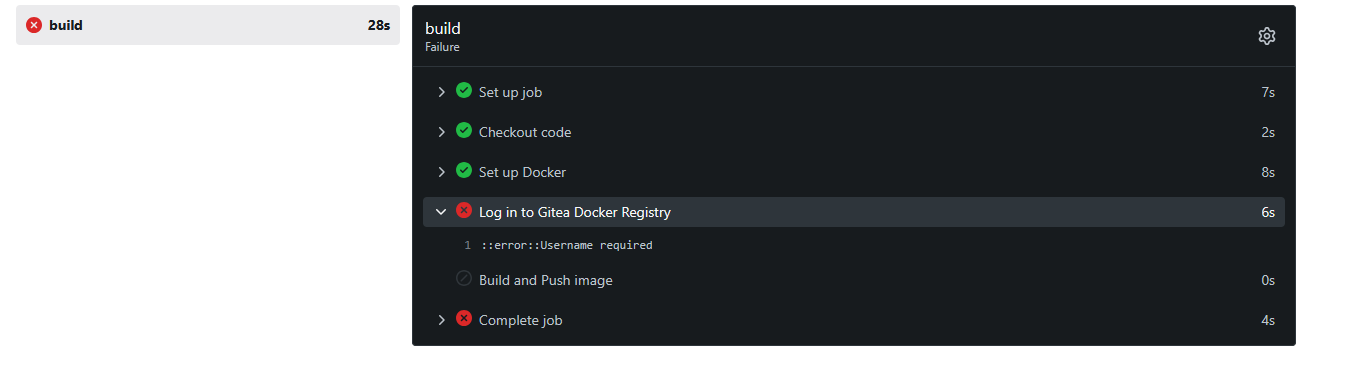

Without secrets.REGISTRY_USERNAME and secrets.REGISTRY_TOKEN set, we expect failure.. and we're not left disappointed:

So, off to Gitea to make some secrets.

Add the REGISTRY_USERNAME secret (which is my username in Gitea), and re-run the action - it's now failing on a missing password, which is expected.

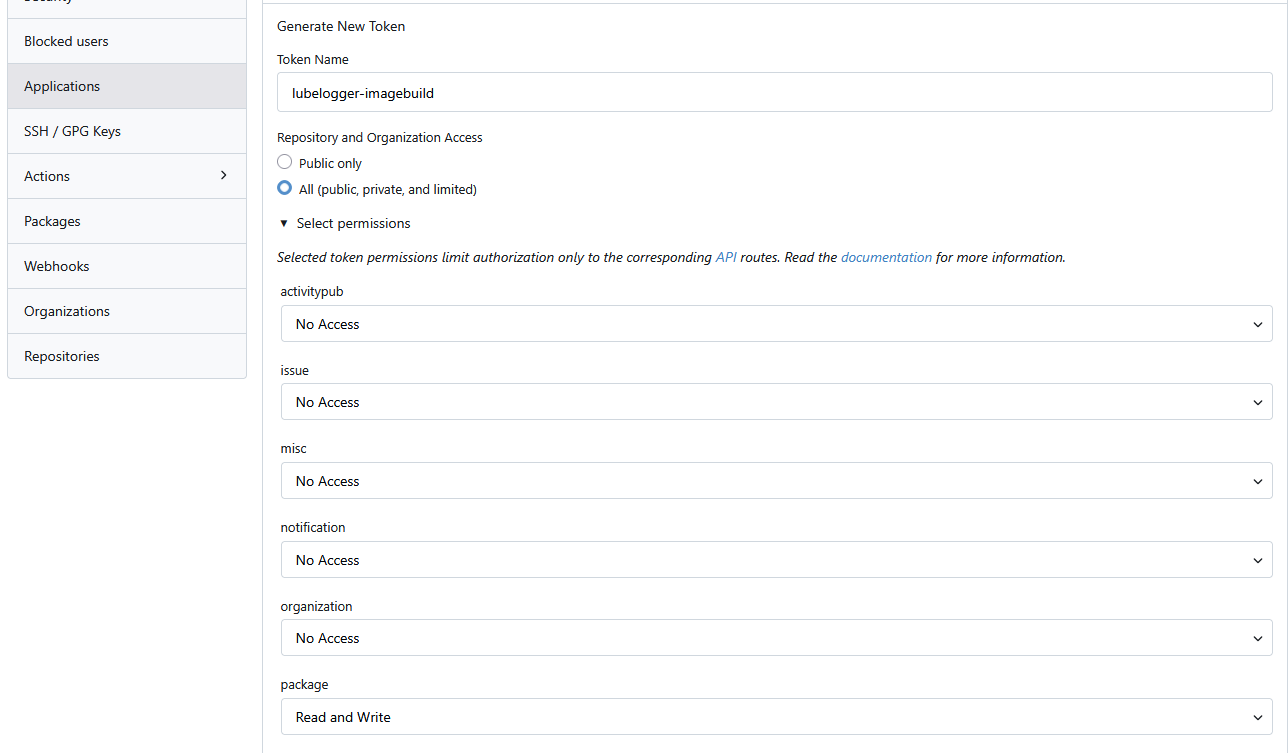

In Gitea, under Settings > Applications, I create a new token called lubelogger-imagebuild with package Read/Write enabled:

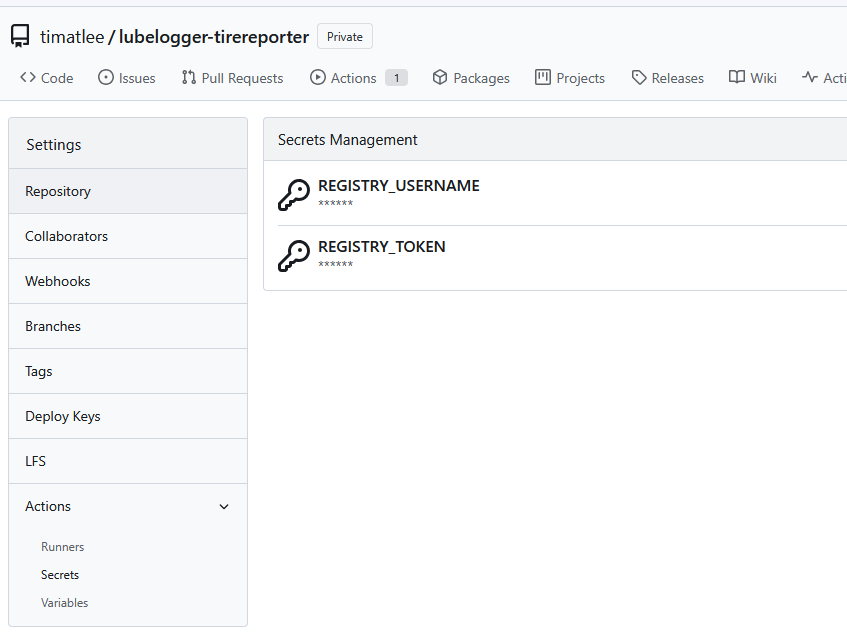

This creates a token, which I put into the repo's secrets. I'm left with the following configured in my repo:

Re-run the action, and we should see a successful action.

A bit more Gitea setup

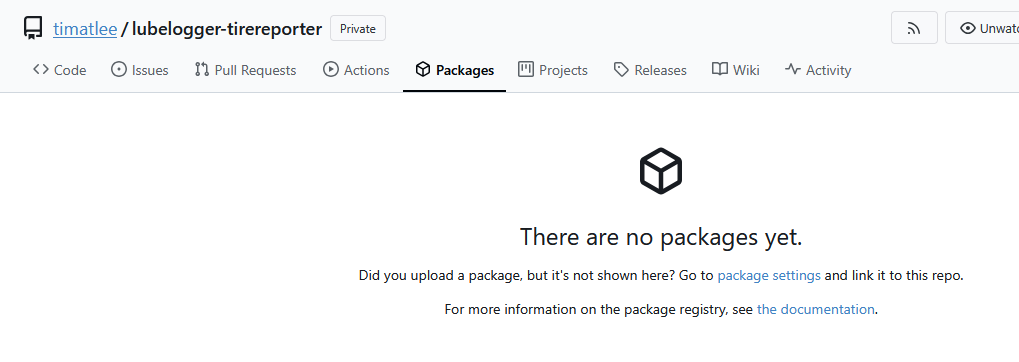

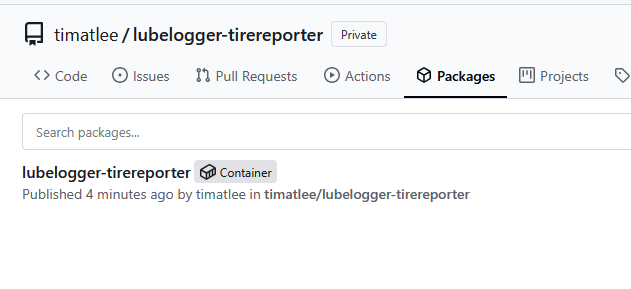

Navigate to the project's package tab, and find that the package isn't listed there. At least we get a hint about where to look for it:

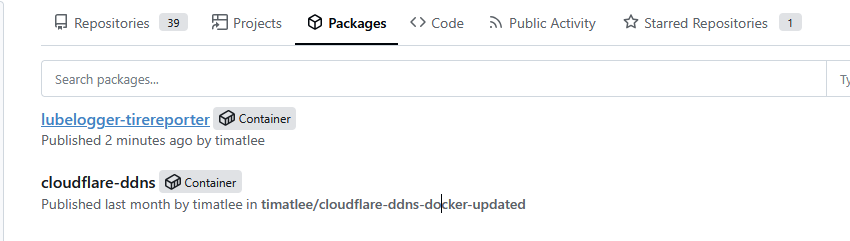

Under my profile's page (https://git.timatlee.com/timatlee/-/packages), the packages I publish are listed:

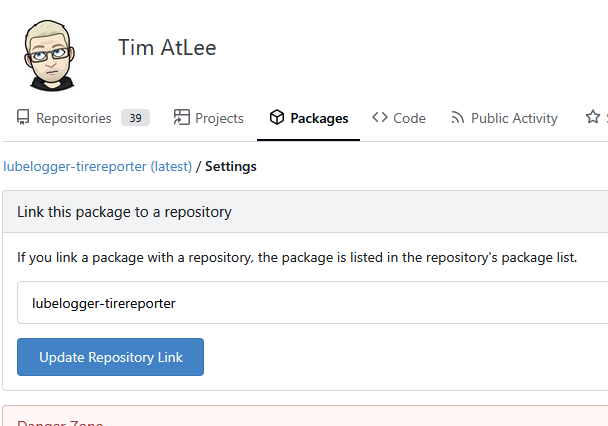

Click into the new package, then into settings, and update the repository link:

And it's now showing in the repository page:

Last bits of testing

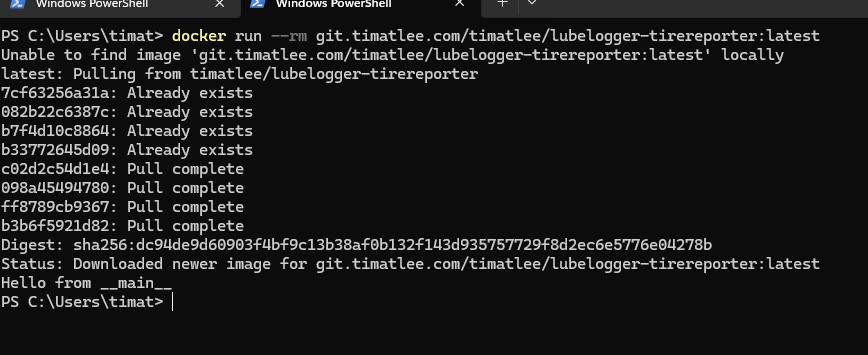

Do some cleanup in Docker to make sure that the image, and as many of its layers as possible, are gone from the local cache, then attempt to run the image with docker run --rm git.timatlee.com/timatlee/lubelogger-tirereporter:latest.

You should see its output as Hello from __main__, just like when it was first scaffolded:

Congrats, you're now ready to build out whatever this was supposed to do - periodically bug the LubeLogger API, get some data, and email it.
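For the "email it" half of that, here's a hedged sketch using only the standard library. It composes a plain-text report from a mapping of tire tag to distance. The report layout, the addresses, and the "km" unit are all assumptions for illustration, and nothing here talks to the LubeLogger API.

```python
from email.message import EmailMessage


def build_report(distances: dict[str, int], sender: str, recipient: str) -> EmailMessage:
    """Compose a plain-text summary email, one line per tire tag (layout is illustrative)."""
    lines = [f"{tag}: {distance} km" for tag, distance in sorted(distances.items())]
    message = EmailMessage()
    message["Subject"] = "Tire distance report"
    message["From"] = sender
    message["To"] = recipient
    message.set_content("\n".join(lines))
    return message


if __name__ == "__main__":
    report = build_report(
        {"winter": 6500, "summer": 5700},
        sender="lubelogger@example.com",
        recipient="me@example.com",
    )
    print(report)
    # Actually sending it is left out; smtplib.SMTP(...).send_message(report)
    # is the usual standard-library route once a mail host is known.
```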

Some parting notes

In Dockerfile, I have used the base image of python:slim. In this state, renovate-bot won't ever update the image when the upstream image changes, because renovate-bot has no idea when the image changes.

As it stands right now, the image will only update when one of the entries in requirements.txt changes (which is blank right now, so .. never). If there is an update to python:slim, we'll never see it.

The recommended approach is Digest Pinning, and ideally we'd even limit it to a major python version. So the first line of Dockerfile now becomes FROM python:3-slim@sha256:f3614d98f38b0525d670f287b0474385952e28eb43016655dd003d0e28cf8652. Renovate-bot will then update the digest when it changes.

Likewise, we run into the same issue in our Kubernetes deployment. Imagine that we are deploying the image with the following cronjob:

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: lubelogger-cronjob
spec:
  schedule: "0 0 * * *" # Runs every day at midnight
  jobTemplate:
    spec:
      template:
        spec:
          containers:
            - name: lubelogger
              image: git.timatlee.com/timatlee/lubelogger-tirereporter:latest
          restartPolicy: OnFailure
```

This probably works, since the job is going to run, then exit. On the next scheduled run, the image will get pulled again (with :latest, Kubernetes defaults to an imagePullPolicy of Always), then run - and maybe in the meantime, the image changed. Ideally, we'd reference the image by digest instead, like git.timatlee.com/timatlee/lubelogger-tirereporter@sha256:880ef7e8325073dfc5292372240b6b4f608f3932531b41d958674f1e3ac22568. Note we omit :latest here.
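As a quick way to spot references that aren't pinned, here's a small sketch that checks whether an image reference ends in a sha256 digest. The function name is my own, and the regex only covers the `@sha256:<64 hex chars>` form used in this post, not every digest algorithm a registry might support.

```python
import re

# Matches a trailing sha256 digest such as "@sha256:f3614d98..." (64 hex characters).
DIGEST_SUFFIX = re.compile(r"@sha256:[0-9a-f]{64}$")


def is_digest_pinned(image: str) -> bool:
    """Return True when the image reference ends with a sha256 digest."""
    return DIGEST_SUFFIX.search(image) is not None


if __name__ == "__main__":
    references = [
        "python:slim",
        "python:3-slim@sha256:f3614d98f38b0525d670f287b0474385952e28eb43016655dd003d0e28cf8652",
        "git.timatlee.com/timatlee/lubelogger-tirereporter:latest",
    ]
    for reference in references:
        status = "pinned" if is_digest_pinned(reference) else "NOT pinned"
        print(f"{status}: {reference}")
```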